Future Technology Predictions

The main force that drives progress in the interface space is reducing the burden of manipulating reality (AKA interaction cost). We see three long-term UX trends that contribute to reducing that burden:

- improving the brain-body-world interface (by means of multimodality)

- improving the brain-world interface

- reducing the number of interactions


I. Brain-Body-World interface

Our first interface is our body. It is a connection between the brain and reality. This interface has to be learned. A newborn’s arms are almost like foreign instruments which he gradually learns to use. Newborns sometimes wake themselves up because their hands are hitting or scratching their face. Newborns don’t understand the causality between what’s happening to their face and what’s happening to their hands (“Oh, these are my own hands, I could just stop doing that to my face” – said no newborn ever…).

Every time a new interface is added on top of the brain-body interface, there is a cost involved.

This cost is low for simple tools like a stone (smash, grind) or a hammer. If you have ever wielded a stone or a stick, you can start using a hammer.

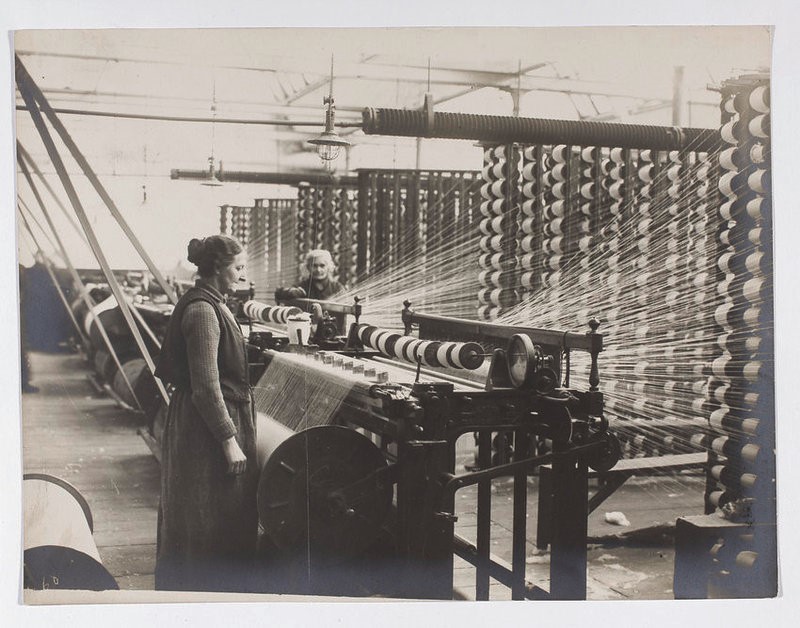

The cost gets higher as tools become more complex. Take a spinning machine. It’s magic! It is fed with spools of thread, it binds them together – and a sheet of textile is thus produced. To accomplish this, the machine has to make a series of tiny movements in the proper sequence. Compare it with a hammer that accomplishes its task (sinking a nail) in one or two swings.

As tools execute more complex manipulations of reality, there are more and more abilities you have to add on top of the brain-body interface to understand and operate them. The spinning machine is harder to use than a hammer because it requires more of these added abilities: the operator needs to do things in the proper sequence for the machine to work properly.

And then we have even more complex interfaces: tools that project our thoughts outside of our heads into reality. Computers in a designer’s work are just that. They require all the basic brain-body interface capabilities as well as knowledge of how to operate them. Computers give us freedom to create simulated realities – variants of our designs – which we can gaze upon, modify and judge by looking at them and running simulations in our heads (“does this work here?”).

Affecting reality is always a cost, a burden. This cost is shared between the operator (you) and the tool you are using. Complex manipulation requires more effort than simple manipulation. Whether manipulating reality is complex or simple, it is always between the tool and the operator to bear this burden. This burden is expressed to the operator in terms of user interface complexity (more burden = more complexity in the UI).

- A hammer – simple manipulation of reality, thus a simple interface is enough.

- A spinning machine – complex manipulation of reality (more operations required), thus the interface becomes more complex (putting more burden between the machine and the user – here the user needs some understanding of how the machine works, has to tend to it, operate it in the proper sequence and watch whether the machine is working well).

- A mainframe computer – even more complex manipulation of reality (vastly more operations happening), so the interface becomes more complex still (putting some of the burden onto the user, who has to understand how the machine works and in what sequence it performs operations in order to know which buttons to push and when).

An undying trend in interface design is to diminish the burden for the operator. This burden is diminished when these things happen:

- The operator can use his pre-existing skills and abilities to successfully use the machine (e.g. when natural language or gestures that in real life mean something to the operator, also mean similar things to the machine, or when visual hierarchy helps take in feedback from the machine).

- There’s a lot of bandwidth to control the machine (the operator doesn’t have to rely only on mouse and keyboard but can use other modalities to feed data to the machine).

- The machine does more things by itself without the need for the operator to pay attention to it. One example would be a Thermomix – you put all the ingredients in, put on a program, and the machine does everything for you. Contrast this with a regular mixer & a pot combination, where first you mix, and then you put things into the pot, and then you keep track of the cooking process. An advanced example: AI perfectly isolating pictures (cutting out from the background) so you don’t have to do it. Or even more – AI automatically isolating different elements in a photo so you can drag and drop them without even having to request the isolation.

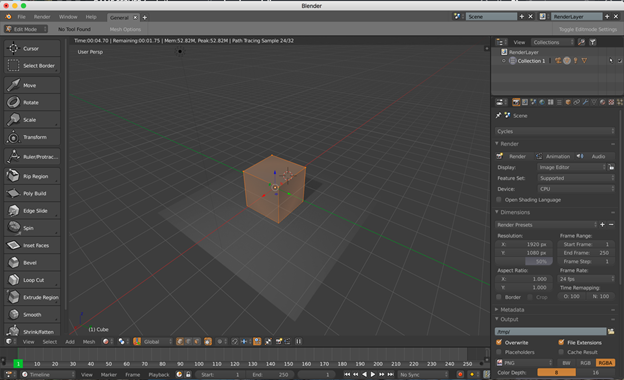

A strong UX trend is the move toward multimodal interfaces. Looking at computers, you can see that the input mechanisms are mainly the mouse, keyboard and tablet. So, to create a reality inside a computer, you are using a small part of your brain-body interface (a small set of hand movements). That small set of operations then has to be translated into many operations within the interface. That’s why software for 3D design is so loaded with options, menus and submenus: there is a small number of input commands that the software interface has to translate into many commands (enough to build 3D objects). It is like writing an essay using combinations of five keys to encode the 26 letters of the alphabet.
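The five-key analogy can be made concrete with a little arithmetic: five keys pressed in any non-empty combination give 2^5 - 1 = 31 distinct "chords", which is enough to cover an alphabet, but only by making the user memorise an arbitrary mapping. The Python sketch below is purely illustrative. The key names and the chord-to-letter assignment are assumptions made up for this example, and the English alphabet is used for convenience.

```python
from itertools import combinations
import string

# Hypothetical names for the five keys of an imaginary chorded keyboard.
KEYS = ("k1", "k2", "k3", "k4", "k5")


def all_chords(keys):
    """Return every non-empty combination of keys pressed together."""
    chords = []
    for size in range(1, len(keys) + 1):
        chords.extend(combinations(keys, size))
    return chords


chords = all_chords(KEYS)

# Arbitrary assignment: the first 26 chords stand for 'a'..'z'.
# zip() stops at the shorter sequence, so 5 chords stay unused.
chord_to_letter = dict(zip(chords, string.ascii_lowercase))
letter_to_chord = {letter: chord for chord, letter in chord_to_letter.items()}


def encode(text):
    """Translate text into the sequence of chords the user would press."""
    return [letter_to_chord[ch] for ch in text.lower() if ch in letter_to_chord]


if __name__ == "__main__":
    print(len(chords), "possible chords for", len(KEYS), "keys")
    print(encode("ux"))
```

The mapping works, but every letter beyond the first five costs the user a multi-key combination that has to be learned, which is the same kind of burden that menus and submenus place on users of narrow-bandwidth input devices.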

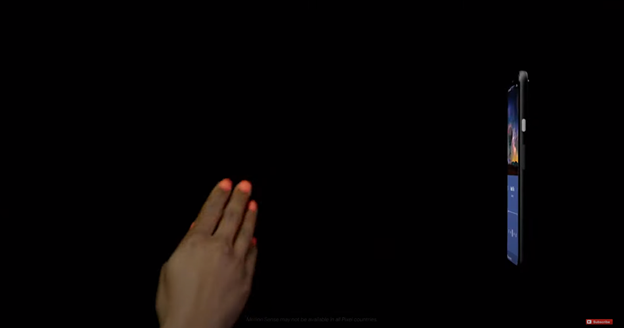

Now imagine that you are designing a 3D object in a 3D environment, and you can manipulate objects with your hands (like in the real world). That’s a whole lot more input commands that you can articulate, so fewer menus and submenus are needed. Add voice commands and you have an even broader bandwidth of input (and even fewer submenus needed).

Multimodality is the future of UX – if you could interact with this 3D object using your innate abilities (moving it, turning it around with your hands, using voice commands), the interface could “come” to you instead of your having to understand all the options and toolbars and where everything is hidden. You could state your intentions for the object and the visual interface would change to accommodate that use case (e.g. by highlighting the functions you will need).

You could say that we are now living in an era in which we will be able to manipulate these man-made environments more and more with touch, gestures, voice, gaze and thought. Caveat: you need to judge which type of input is best suited to a particular operation. Example: if you are watching TV and flicking through the channels, then pushing a tiny button with the tip of your finger is more convenient than shouting voice commands or waving your arm so that the TV can “see” you. So it is not about being multimodal for the sake of it. It is about tapping into the brain-body-world interface when it makes things easier and more efficient for the user.


II. The Brain-World Interface: Bypassing the body

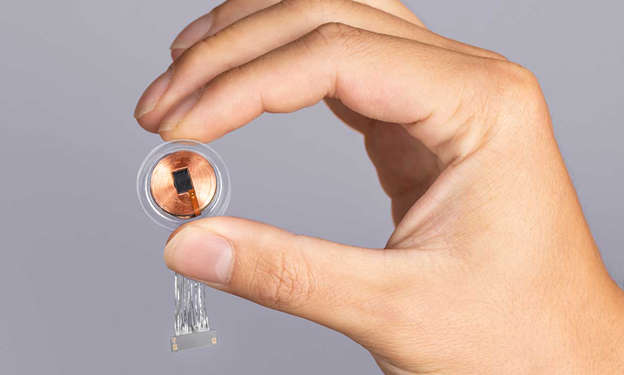

The brain-machine interface is a parallel interface to the brain-body interface. In theory, it can connect your brain to the world (rendering the body unnecessary for this purpose). It can also fix a loss of brain-body functions, in cases where the original hardware of the brain-body interface has been damaged. Is this what a future user interface will look like?

Even looking far into the future, we may conclude that the body becoming unnecessary for the brain to function is still the domain of science fiction. Much closer to reality is the prospect of using the brain-world interface for some tasks, starting with simple ones. Example: you are designing a scene in Photoshop and you change the type of brush you are using by thinking of it (yeah, I know it sounds like a gross under-utilisation of such an interface, but I believe that in the first days of such interfaces we will be looking at simple applications).

Here’s what scientists are saying about it:

While an ideal BCI would allow communication using arbitrary conscious activity, so far only a few direct brain communication paradigms have been invented. [For example] The control strategy of MI-BCIs [motor imagery Brain-Computer Interfaces] requires the users to consciously imagine performing a bodily movement. (…) Training is usually needed to achieve reasonable accuracy in control of the MI-BCI (…) human participant trains with the help of neurofeedback. Meanwhile, the machine learning algorithms at the computer’s end learn the participant’s ERD patterns (event-related desynchronisation of neural rhythms over the motor cortex) to interpret what is going on. This process is called co-adaptation (source here).

III. The future of UI: Reducing the interface – Minimizing the number of interactions, so less interface is needed

In a way, this is the holy grail of UX design trends – make things happen for users without an ounce of effort on their part. Example: cutting out pictures in Photoshop. Some time ago, you had to do it by hand. Now AI does it almost perfectly, saving you time and effort. But what if Photoshop pre-selected all the elements in the picture and had them ready for you to use? Imagine uploading a picture to Photoshop and just moving things around – without even needing to isolate them first. That would be neat.

Think of a reduced interface in Uber: it is already very convenient. You don’t have to worry about explaining your location, paying the driver etc. But we could imagine further improvements: for example, getting an Uber without needing to call for it. You get out the door and the Uber is waiting for you, because your home AI observed your state of preparedness and ordered the car at the right moment. This is still science fiction, but it might be possible some day. It may be the future of UX design: finding more and more interactions we can cut out of the user experience altogether.

One disadvantage of such a situation: exposing yourself to being constantly observed and analysed by machines (to the point that they know more about you than you do yourself).

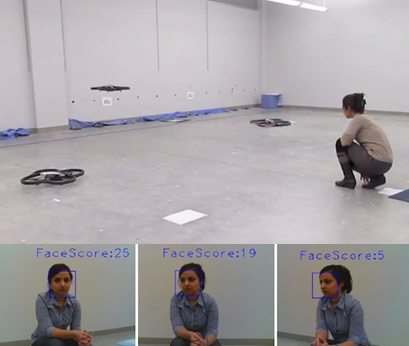

One of the key factors that interface reduction relies on is determining intent. If the machine “knows” what the operator is trying to accomplish, it can just do that for them. One way to determine intent is to observe the user’s face and track where the user is looking. Imagine, for example, that you are working in Photoshop and want to select an object, and you can do just that by looking at it.
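As a rough illustration of gaze-based selection, the Python sketch below picks the object under a gaze point by testing it against each object's bounding box. Everything here is hypothetical: `SceneObject`, `object_under_gaze` and the assumption that an eye tracker delivers gaze coordinates in the same space as the canvas are invented for this example and do not describe how Photoshop or any real eye-tracking product works.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class SceneObject:
    """A hypothetical selectable element, described by its bounding box."""
    name: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        """True if the point (px, py) lies inside the bounding box."""
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)


def object_under_gaze(objects: Sequence[SceneObject],
                      gaze_x: float,
                      gaze_y: float) -> Optional[SceneObject]:
    """Return the topmost object containing the gaze point, if any.

    Objects are assumed to be listed back to front, so the last
    match in the list is the one the user actually sees.
    """
    for obj in reversed(objects):
        if obj.contains(gaze_x, gaze_y):
            return obj
    return None


if __name__ == "__main__":
    scene = [
        SceneObject("sky", 0, 0, 100, 100),
        SceneObject("tree", 20, 20, 30, 50),
    ]
    selected = object_under_gaze(scene, 25, 30)
    print(selected.name if selected else "nothing selected")
```

A real system would also need to smooth noisy gaze data and decide when a glance counts as intent (for instance, by requiring the gaze to dwell on an object for a moment), which this sketch leaves out.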

IV. Current UX trends

We are Artegence, and we help clients design and develop software tools that consumers love using. We keep an eye on emerging technologies, but what we really focus on are things that do not change with the seasons: how people think about the world, how they interact with it and how they reach the decision to purchase your products.

Thanks to this approach, the process of building websites and creating graphic designs, communication and a functional backlog feels like a treat, not a chore. If you are thinking of building software, give us a call.